By: Ihab Ezroura, Lead Cloud Engineering at Mapersive

“Making Computers See What We See!”

Background: Mapersive and the Project Context

Mapersive is a SaaS solution that offers an immersive experience for navigating 360-degree image datasets and collecting spatial data for various purposes. Utilizing state-of-the-art Geo-AI and computer vision algorithms, the platform enables efficient data extraction from these images. Users can click on objects within the 360-degree imagery to compute real-world coordinates and 3D measurements. The platform also supports the export of spatial data into GIS-compatible formats like GeoJSON, Shapefile and KML, making it a valuable tool for analysis and insights.

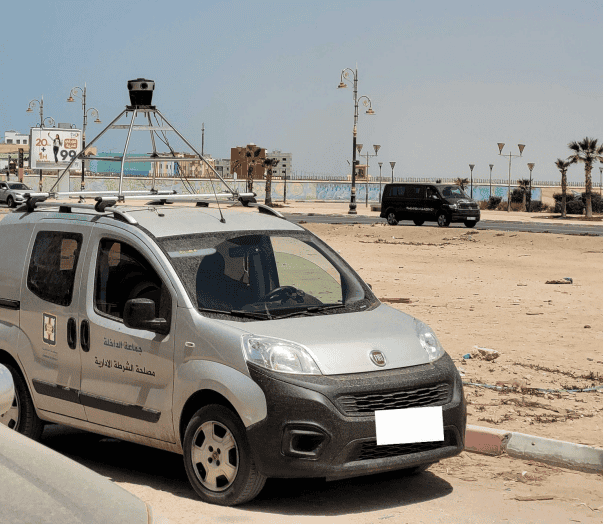

Our recent project with a government entity in Morocco involved collecting 360-degree imagery over a 250 km road network. The goal was to integrate the collected spatial data into the city’s ArcGIS ecosystem and to support decision-makers in urban planning and infrastructure management. The project required detailed images and accurate GPS data, which made the Mosaic 51 camera system the ideal choice among the camera providers we currently support in our solution.

Performance Evaluation of the Mosaic 51 Camera System

1. Built-in GPS Accuracy and Performance:

Accurate GPS data is crucial for any urban imagery project. The Mosaic 51 camera system delivered commendable GPS accuracy, between 1.8 m and 2.2 m across most areas. Error occasionally spiked in narrow streets, where surrounding buildings can block or reflect satellite signals. These spikes were infrequent and did not significantly affect overall data quality. The GPS accuracy met our client’s needs and ensured reliable geolocation of spatial entities.
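Figures like these are commonly computed in two steps. First, take the horizontal distance between each logged GPS fix and a surveyed reference point. Then summarize those distances as a root-mean-square (RMS) error. The sketch below is a minimal illustration of that approach, assuming a spherical Earth and the haversine formula. The evaluation method behind the 1.8 m to 2.2 m range is not stated here, and the coordinates in the example are hypothetical.

```python
import math

# Mean Earth radius in meters; the spherical-Earth model is a simplifying assumption.
EARTH_RADIUS_M = 6_371_008.8

def horizontal_error_m(lat1, lon1, lat2, lon2):
    """Great-circle (haversine) distance in meters between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # min() guards against tiny floating-point overshoots above 1.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))

def rms_error_m(fixes, references):
    """Root-mean-square horizontal error over paired (lat, lon) fixes and reference points."""
    errors = [horizontal_error_m(*f, *r) for f, r in zip(fixes, references)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Hypothetical GPS fixes and surveyed reference points.
fixes = [(34.020000, -6.830000), (34.021000, -6.831000)]
references = [(34.020015, -6.830010), (34.021012, -6.830985)]
print(rms_error_m(fixes, references))
```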

2. Image Quality and Resolution:

The Mosaic 51’s 12K resolution was a standout feature, providing high-resolution imagery crucial for our project. This high resolution enabled Mapersive to identify small entities up to 30 meters away from the street-view vehicle with remarkable clarity. Additionally, the camera’s ability to quickly adjust to dynamic lighting conditions ensured consistent image quality throughout the data collection process. This adaptability was particularly beneficial in an urban setting with varying lighting scenarios.
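A back-of-the-envelope check shows why resolution matters at 30 meters. In an equirectangular 360° panorama, the full image width of W pixels spans 2π radians. Near the horizon, each pixel therefore covers roughly d·2π/W meters at distance d. The sketch assumes a panorama exactly 12,000 pixels wide for simplicity; the Mosaic 51’s actual output width may differ.

```python
import math

def pixel_footprint_m(distance_m, panorama_width_px):
    """Approximate width in meters covered by one pixel at a given distance.

    Assumes an equirectangular 360-degree panorama whose full width spans 2*pi radians,
    and uses the small-angle approximation: arc length = distance * angle.
    """
    angle_per_pixel = 2 * math.pi / panorama_width_px
    return distance_m * angle_per_pixel

def pixels_across(object_width_m, distance_m, panorama_width_px):
    """Approximate number of pixels an object of the given width spans horizontally."""
    return object_width_m / pixel_footprint_m(distance_m, panorama_width_px)

# Hypothetical 12,000-pixel-wide panorama, object 30 m from the vehicle.
print(pixel_footprint_m(30, 12_000))
print(pixels_across(0.10, 30, 12_000))
```

At 30 m this gives about 1.6 cm per pixel, so a 10 cm object spans roughly six pixels horizontally.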

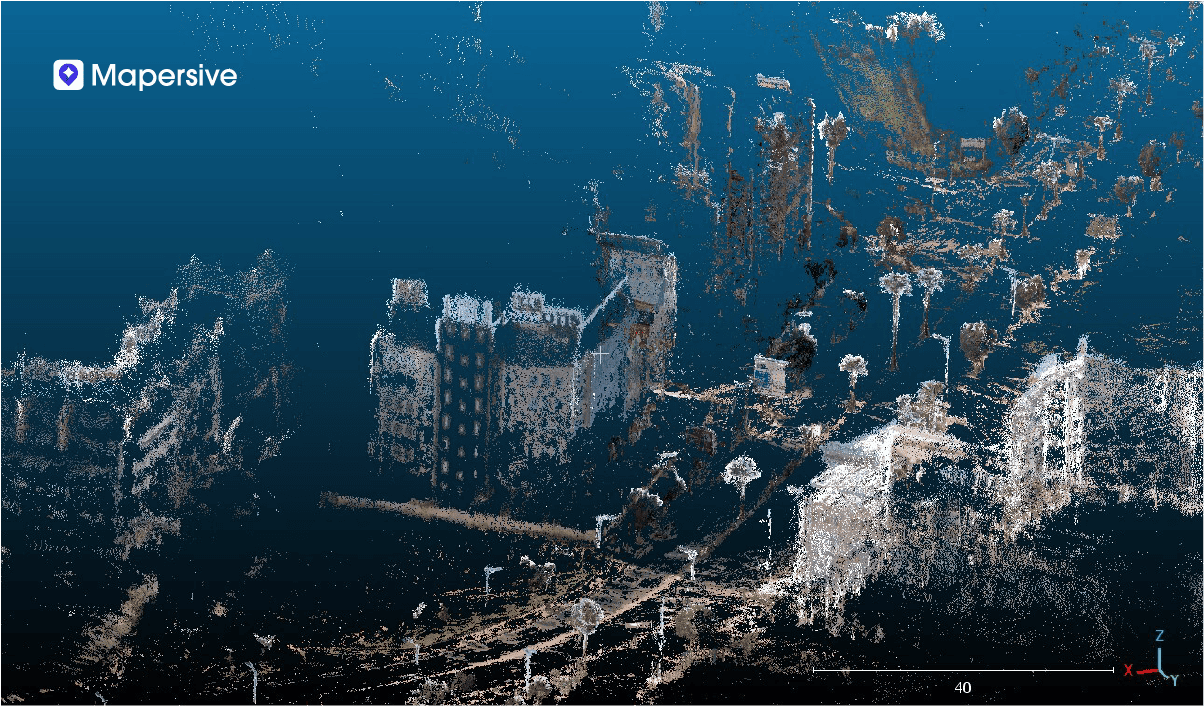

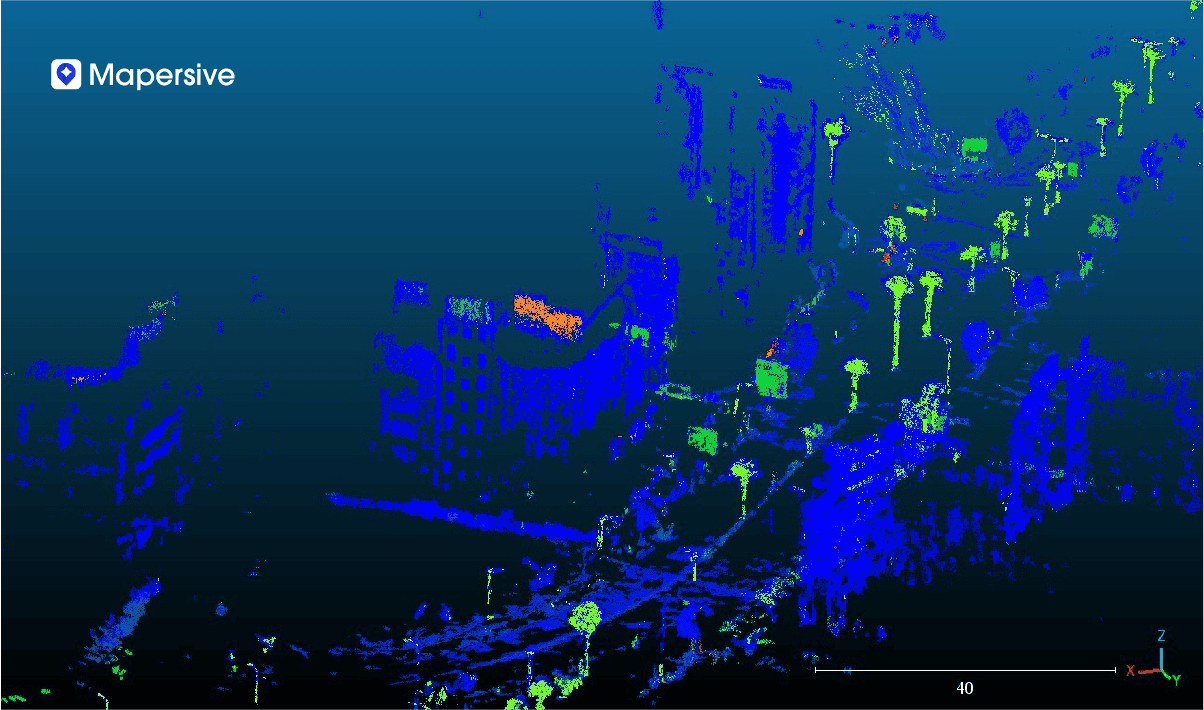

3. 3D Reconstruction and Spatial Data Accuracy:

One of the key advantages of the Mosaic 51 camera system was its contribution to 3D reconstruction. Our platform leverages cutting-edge 3D reconstruction algorithms and computer vision models to create detailed digital twins of urban landscapes using 360-degree imagery and GPS data.

The high resolution of the Mosaic 51 allowed us to build these digital twins with enough detail to accurately estimate and adjust spatial data. Spatial data extracted from the digital twins achieved an average absolute accuracy of 2 meters for entity positions, with sub-meter relative accuracy for dimensional computations such as surface areas, heights, and distances. This level of precision was instrumental in meeting our project requirements and providing valuable insights to the city government.
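One plausible reason relative accuracy can be much better than absolute accuracy is shared error. An error common to nearby points, such as a shared georeferencing offset, shifts every point equally. It counts fully against absolute accuracy but cancels out in distances, heights, and areas. The sketch below illustrates this with a hypothetical 2 m offset. It is a simplified model, not a description of Mapersive’s actual error budget (requires Python 3.8+ for `math.dist`).

```python
import math

def shift(point, offset):
    """Apply the same (x, y, z) offset in meters to a point."""
    return tuple(c + o for c, o in zip(point, offset))

def absolute_error(measured, truth):
    """Euclidean position error in meters."""
    return math.dist(measured, truth)

def relative_error(measured_a, measured_b, truth_a, truth_b):
    """Error in meters of the measured distance between two points."""
    return abs(math.dist(measured_a, measured_b) - math.dist(truth_a, truth_b))

# Hypothetical ground truth: two corners of an object 5 m apart.
truth_a, truth_b = (0.0, 0.0, 0.0), (4.0, 3.0, 0.0)
# Hypothetical common georeferencing offset with a magnitude of 2 m.
offset = (1.2, -1.6, 0.0)
measured_a, measured_b = shift(truth_a, offset), shift(truth_b, offset)

print(absolute_error(measured_a, truth_a))
print(relative_error(measured_a, measured_b, truth_a, truth_b))
```

The first value is about 2 m. The second is zero up to floating-point rounding, because the offset cancels in the distance.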

Use Case: Impact and Integration

The spatial data collected using the Mosaic 51 camera system was integrated into the city’s ArcGIS ecosystem, enabling the government entity to gain insights into over 50,000 spatial entities. These included:

- Lamp posts

- Potholes

- Traffic signs

- Advertisement panels

- Commercial establishments

- Historical sites

- Urban infrastructure

The ability to visualize and analyze this data in a GIS platform facilitated informed decision-making and efficient urban management. The project demonstrated the effectiveness of combining high-resolution imagery with advanced spatial data extraction and analysis tools.

How are Mapersive customers using Mosaic imagery data?

How do you create a 3D reconstruction in Mapersive?

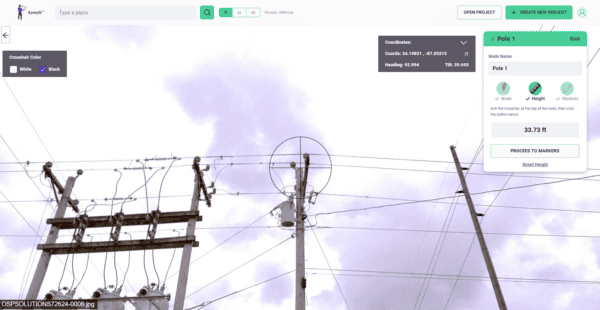

Mapersive gives users the ability to compute the location and dimensions of any object they can see in their image sequences, which are called virtual tours or just “Tours”. Mapersive’s tour navigator provides users the option to select one of the following data extraction modes:

- Point extraction

- Distance extraction

- Height extraction

- Vertical surface extraction

- Horizontal surface extraction

Breaking it down, step by step, here is how to use the extraction tools:

- The user first selects the extraction mode they would like to use.

- The user then selects, in the navigator, the object whose location they want to compute.

- The user must select the same object in two separate scenes where it is visible. These two perspectives are fed to Mapersive’s Geo-AI algorithms, which compute the object’s 3D coordinates within a few seconds.

- The final step is feature annotation. The user manually fills in custom metadata for the extracted object, then assigns it a feature class from a comprehensive list of object categories commonly found in urban and rural environments.
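Geometrically, picking the same object in two scenes gives two viewing rays, one from each camera position. The object lies near the point where those rays cross. A textbook way to intersect them is the midpoint method: find the closest point on each ray and average the two. The sketch below assumes camera centers and ray directions are already known in a local metric frame. Mapersive’s Geo-AI pipeline may use a different estimator.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def _scale(a, s):
    return tuple(x * s for x in a)

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + t*d1 and c2 + s*d2.

    c1, c2: camera centers; d1, d2: viewing directions toward the selected object.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; choose scenes with a wider baseline")
    t = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    s = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, t))
    p2 = _add(c2, _scale(d2, s))
    return _scale(_add(p1, p2), 0.5)

# Hypothetical example: two camera positions 10 m apart, both sighting the same lamp post.
print(triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (10.0, 0.0, 0.0), (-1.0, 1.0, 0.0)))
# → (5.0, 5.0, 0.0)
```

A wider baseline between the two scenes makes the rays less parallel and the estimate more stable.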

What features can be extracted?

The platform includes 2,556 unique feature references, grouped into domains.

These are the object categories users can assign to manually extracted objects. Because the full list is so long, I have included only the main feature types and subtypes:

- “Road Signs” (North American signs only, as described in the Federal Highway Administration’s MUTCD):

- “Bicycle Facility and Shared-Use Path Signs”

- “Destination and Distance”

- “Expressway Signs”

- “Marker Signs”

- “Miscellaneous”

- “Regulatory Signs”

- “School Signs”

- “Warning Signs”

- “Lighting”

- “Street Lighting”

- “Traffic Signals”

- “Vegetation”

- “Ornamental Plants”

- “North American Trees”

- “Utilities”

- “Parking”

- “Public Facilities”

- “Sanitation”

- “Sewage”

- “Utilities infrastructure”

- “Utility Poles”

- “Water Outlet”

- “Miscellaneous”

- “Association”

- “Commercial Industry”

- “Electric pole”

- “Environmental hotspot”

- “Financial Services”

- “Flag”

- “Liberal profession”

- “Service area”

- “Vegetation”

- “Bench”

- “Building”

- “Company”

- “Educational Services”

- “Factory”

- “Health and wellness”

- “Place of worship”

- “Public Administration”

- “Telecom pole”

- “Transportation”

- “Flags”

- “Enclosures”

- “Bollards”

- “Permanent Fences”

- “Permanent Walls”

- “Temporary Barriers”

- “Temporary Enclosures”

- “Road Surface”

- “Road Damage”

- “Road Markings”

The workflow also includes the option of taking a screenshot of the extracted object, which then becomes part of the object’s attributes and can be exported alongside its metadata for added context.
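To make the annotation step concrete, an extracted object can be thought of as a record. It holds the computed position, a feature class, user-entered metadata, and an optional screenshot. The sketch below is hypothetical: the field names and the small taxonomy excerpt (taken from the list above) are illustrative and do not reflect Mapersive’s actual schema.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical excerpt of the feature taxonomy: main type -> allowed subtypes.
FEATURE_TAXONOMY = {
    "Road Signs": {"Regulatory Signs", "Warning Signs", "School Signs"},
    "Lighting": {"Street Lighting", "Traffic Signals"},
    "Road Surface": {"Road Damage", "Road Markings"},
}

@dataclass
class ExtractedFeature:
    """Hypothetical record for one manually extracted and annotated object."""
    feature_type: str
    subtype: str
    lon: float
    lat: float
    elevation_m: float
    metadata: dict = field(default_factory=dict)  # custom user-entered attributes
    screenshot_path: Optional[str] = None         # optional screenshot attribute

    def __post_init__(self):
        # Reject feature classes that are not in the taxonomy.
        allowed = FEATURE_TAXONOMY.get(self.feature_type)
        if allowed is None or self.subtype not in allowed:
            raise ValueError(f"unknown feature class: {self.feature_type} / {self.subtype}")

# Hypothetical example: a street light with a condition note and a screenshot.
lamp = ExtractedFeature("Lighting", "Street Lighting", -6.8326, 34.0209, 12.0,
                        metadata={"condition": "working"}, screenshot_path="lamp_001.jpg")
print(lamp)
```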

How do you take measurements of objects?

To take measurements of objects:

- The user selects one of the other extraction modes listed above (distance, height, vertical surface, or horizontal surface).

- The user draws the selected geometry over the object in two separate scenes where it is visible, just as for point extraction.

- The extraction tools make it easy to draw lines (for heights and distances) and polygons (for horizontal and vertical surfaces). These are then fed to Mapersive’s algorithms to compute values such as the object’s length or surface area, in either meters or feet.
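Once the drawn vertices are available as 3D points in a metric frame, the measurements themselves are simple geometry. The sketch below is an illustration under that assumption, not Mapersive’s implementation:

- A polyline length covers distances.
- A vertical difference covers heights, assuming the z axis points up.
- Newell’s method gives the area of a planar polygon in any orientation, so it handles both horizontal and vertical surfaces.
- Conversion to feet uses the exact international foot of 0.3048 m.

```python
import math

M_PER_FT = 0.3048  # exact length of the international foot in meters

def polyline_length(points):
    """Total length in meters of a 3D polyline given as (x, y, z) points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

def vertical_height(bottom, top):
    """Height in meters as the z difference between two points (assumes z points up)."""
    return abs(top[2] - bottom[2])

def polygon_area(vertices):
    """Area of a planar 3D polygon via Newell's method: half the length of the normal vector."""
    nx = ny = nz = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1, z1 = vertices[i]
        x2, y2, z2 = vertices[(i + 1) % n]
        nx += (y1 - y2) * (z1 + z2)
        ny += (z1 - z2) * (x1 + x2)
        nz += (x1 - x2) * (y1 + y2)
    return 0.5 * math.sqrt(nx * nx + ny * ny + nz * nz)

def to_feet(meters):
    return meters / M_PER_FT

def to_square_feet(square_meters):
    return square_meters / M_PER_FT ** 2

# Hypothetical vertical surface: a 4 m x 2.5 m wall in the x-z plane.
wall = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (4.0, 0.0, 2.5), (0.0, 0.0, 2.5)]
print(polygon_area(wall), to_square_feet(polygon_area(wall)))
```

For the example wall this gives 10 m², about 107.6 ft².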

The Role of AI and Computer Vision:

Automatic object detection of feature classes:

Mapersive’s computer vision algorithms automatically recognize objects while processing the images. These automatic detections are used in the following parts of the processing workflow:

- Enhancing the accuracy of the 3D reconstruction generated from the image sequence, which in turn improves the precision of location data during object extraction. This is achieved by giving more weight to certain image segmentation classes than to others when establishing image correspondences within the sequence.

- Blurring personally identifiable information (license plates and people).

- (In progress) “Hot spot” identification: we are working on a feature that shows where different object classes appear within image sequences, guiding users toward “hot spots” where specific classes have been detected.
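One way to picture “giving more weight to certain segmentation classes”: each keypoint match between two images inherits the segmentation class under its endpoints. That class then sets a weight for later steps, for example a weighted pose estimate. Everything in the sketch is a hypothetical illustration, including the class names, weight values, and the rule of taking the lower of the two weights. Mapersive’s actual classes and weighting scheme may differ.

```python
from dataclasses import dataclass

# Hypothetical weights: static structures are trusted, moving objects and sky are ignored.
CLASS_WEIGHTS = {
    "building": 1.0,
    "pole": 1.0,
    "traffic_sign": 1.0,
    "road": 0.5,
    "vegetation": 0.3,
    "vehicle": 0.0,
    "person": 0.0,
    "sky": 0.0,
}
DEFAULT_WEIGHT = 0.5  # hypothetical weight for classes not listed above

@dataclass
class Match:
    """A keypoint correspondence between images A and B, with the class under each keypoint."""
    pixel_a: tuple
    pixel_b: tuple
    class_a: str
    class_b: str

def weight_matches(matches):
    """Return (match, weight) pairs, dropping matches whose weight is zero.

    A match is treated as only as reliable as its less reliable endpoint,
    so its weight is the minimum of the two class weights.
    """
    weighted = []
    for m in matches:
        w = min(CLASS_WEIGHTS.get(m.class_a, DEFAULT_WEIGHT),
                CLASS_WEIGHTS.get(m.class_b, DEFAULT_WEIGHT))
        if w > 0.0:
            weighted.append((m, w))
    return weighted
```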

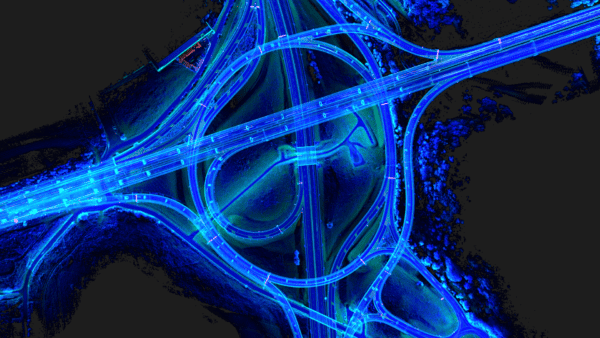

Building 3D Point Clouds without LiDAR

The spatial computations that underpin the data extraction tools are performed on 3D point clouds generated by Mapersive’s Geo-AI algorithms. This allows Mapersive to reconstruct and understand scenes spatially without LiDAR scanning equipment.

The user therefore only needs to upload image sequences tagged with GPS data. The algorithms then estimate the pose (position and orientation) of the camera system used to collect the image sequence, along with intrinsic camera parameters such as focal length.
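The estimated focal length and pose are what connect a pixel the user clicks to a direction in the real world. The sketch below uses a standard pinhole model with a focal length in pixels and a principal point (cx, cy). This is an assumption for illustration: a 360° rig like the Mosaic 51 combines several lenses, and the camera model Mapersive estimates may differ. The rotation matrix is likewise assumed to map camera-frame vectors into the world frame.

```python
import math

def pixel_to_camera_ray(u, v, f_px, cx, cy):
    """Back-project pixel (u, v) into a unit direction in the camera frame.

    Assumed convention: x points right, y points down, z points forward.
    """
    x = (u - cx) / f_px
    y = (v - cy) / f_px
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)

def rotate(matrix, vec):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return tuple(sum(matrix[i][j] * vec[j] for j in range(3)) for i in range(3))

def pixel_to_world_ray(u, v, f_px, cx, cy, r_world_from_cam, camera_center):
    """Return (origin, unit direction) of the viewing ray through a pixel, in world coordinates."""
    direction = rotate(r_world_from_cam, pixel_to_camera_ray(u, v, f_px, cx, cy))
    return camera_center, direction

# Hypothetical intrinsics and pose: identity rotation, camera at the origin.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(pixel_to_world_ray(1960, 540, 1000.0, 960.0, 540.0, IDENTITY, (0.0, 0.0, 0.0)))
```

Because a rotation preserves length, the world-frame direction stays a unit vector.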

Finally, a point cloud is generated from the image data and transformed into an absolute coordinate system so that real-world scale is preserved. This point cloud serves as the backbone of all the spatial computations users perform on their tours.
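One common way to georeference a reconstruction is to fit a similarity transform (scale, rotation, translation) that maps the reconstructed camera centers onto their GPS positions, then apply it to every point. This is offered as an assumption, not a confirmed description of Mapersive’s approach. The sketch below works in the horizontal plane only. It treats 2D points as complex numbers, so the least-squares fit has a closed form. It assumes GPS positions were already converted to local east/north meters; a full pipeline would also handle elevation and outliers.

```python
import cmath
import math

def fit_similarity_2d(src, dst):
    """Least-squares 2D similarity mapping src (x, y) points onto dst (x, y) points.

    Models dst ~ a * src + b with complex a (scale and rotation) and complex b (translation).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two paired points")
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    z_mean = sum(zs) / len(zs)
    w_mean = sum(ws) / len(ws)
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    if den == 0:
        raise ValueError("source points must not all coincide")
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    a = num / den
    b = w_mean - a * z_mean
    return a, b

def apply_similarity(a, b, point):
    """Map one (x, y) point through the fitted transform."""
    w = a * complex(*point) + b
    return (w.real, w.imag)

# Hypothetical camera centers in the reconstruction frame (arbitrary units)...
recon = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# ...and their GPS positions in local east/north meters.
gps = [(10.0, 5.0), (10.0, 7.0), (8.0, 5.0)]
a, b = fit_similarity_2d(recon, gps)
print("scale:", abs(a), "rotation (deg):", math.degrees(cmath.phase(a)))
print(apply_similarity(a, b, (0.5, 0.5)))
```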

Integrating Data into ArcGIS

Integrating the data collected through Mapersive into GIS software like the ArcGIS ecosystem is achieved through the Layer export interface. The user can apply a wide variety of filters to all the data in their workspace if they wish to download specific layers.

For example, the user can select which specific “tours” to download objects from, which “business units”, a date range during which the data has been collected, as well as specific metadata values like object type, feature type and subtype, and geometry.

After filtering the data, the user can download it as a Shapefile (the best file format for use with the ESRI ecosystem), as KML for Google Earth, or as GeoJSON.
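As an illustration of the filter-then-export flow, the sketch below filters hypothetical feature records by tour, business unit, date range, and feature type. It then builds a GeoJSON FeatureCollection from the result. The record fields are assumptions, not Mapersive’s export schema. The output follows RFC 7946, which orders coordinates as longitude, then latitude. Writing Shapefile or KML would need a dedicated library and is not shown.

```python
import json
from datetime import date

def filter_features(features, tours=None, business_units=None,
                    start=None, end=None, feature_types=None):
    """Keep records that match every filter that is set (None means no filter)."""
    selected = []
    for f in features:
        if tours is not None and f["tour"] not in tours:
            continue
        if business_units is not None and f["business_unit"] not in business_units:
            continue
        if start is not None and f["collected_on"] < start:
            continue
        if end is not None and f["collected_on"] > end:
            continue
        if feature_types is not None and f["feature_type"] not in feature_types:
            continue
        selected.append(f)
    return selected

def to_geojson(features):
    """Build an RFC 7946 FeatureCollection of Point features."""
    return {
        "type": "FeatureCollection",
        "features": [
            {
                "type": "Feature",
                # GeoJSON positions are [longitude, latitude].
                "geometry": {"type": "Point", "coordinates": [f["lon"], f["lat"]]},
                "properties": {
                    "tour": f["tour"],
                    "business_unit": f["business_unit"],
                    "collected_on": f["collected_on"].isoformat(),
                    "feature_type": f["feature_type"],
                    "subtype": f["subtype"],
                },
            }
            for f in features
        ],
    }

# Hypothetical records.
records = [
    {"tour": "T-01", "business_unit": "Roads", "collected_on": date(2024, 3, 4),
     "feature_type": "Road Surface", "subtype": "Road Damage", "lon": -6.8326, "lat": 34.0209},
    {"tour": "T-02", "business_unit": "Lighting", "collected_on": date(2024, 5, 20),
     "feature_type": "Lighting", "subtype": "Street Lighting", "lon": -6.8401, "lat": 34.0152},
]
subset = filter_features(records, feature_types={"Road Surface"}, start=date(2024, 1, 1))
print(json.dumps(to_geojson(subset), indent=2))
```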

Conclusion

Our experience with the Mosaic 51 camera system has been overwhelmingly positive. Its exceptional GPS accuracy, high-resolution imagery, and adaptability to dynamic lighting conditions have made it a reliable tool for large-scale urban image collection. The detailed 3D reconstructions and accurate spatial data extractions have exceeded our client’s expectations, proving the Mosaic 51 to be an excellent investment for similar projects.

Recommendations

Given our successful experience, we recommend the Mosaic 51 camera for:

- Projects requiring high-resolution imagery for detailed spatial analysis.

- Urban environments where accurate GPS data is critical.

- Applications involving 3D reconstruction and GIS integration.

Mosaic’s support and the camera’s performance have significantly contributed to the success of our projects, and we look forward to continuing our collaboration with Mosaic in future endeavors.

Read more about Mapersive and their work here:

→ Revolutionizing Urban Planning & Infrastructure Development