Decoding Image Resolution: Why Every Pixel Counts

Image resolution isn’t just about the number of pixels a camera can capture. As Jeffrey Martin, Mosaic CEO, explains:

“The number of megapixels isn’t the whole story. You need to look at the lens quality and how well the sensor and lens are matched to produce sharp, clear images with minimal distortion.”

In this article, we’ll be drawing from the expertise of Jeffrey Martin, an acclaimed photographer and the CEO of Mosaic. As a pioneer in 360-degree imaging, Jeffrey has pushed the boundaries of digital imagery, creating the largest gigapixel images in the world. His hands-on experience with camera systems positions him as an authority on the technicalities of image resolution, sensors, and lenses, which we’ll explore throughout this article.

Image resolution is the linchpin of digital imaging, dictating the crispness of a photograph and the accuracy of satellite imagery. It’s what makes some images pop with vibrancy and detail, while others fade into obscurity. More than at any point in history, our world is captivated by visuals (and capable of producing them at great scale), so the sharpness of an image is pivotal, not just for professionals and enthusiasts but for anyone navigating the digital world.

- Why can two cameras with the same number of megapixels produce vastly different image qualities?

- How does resolution affect our ability to keep personal data private in a photo?

- What role does resolution play in cutting-edge machine learning applications?

- Can a higher number of pixels compromise the anonymity in images?

- Why is the resolution critical in distinguishing a stop sign from a pedestrian in autonomous vehicles?

You can explore more about Jeffrey’s work both here on Mosaic’s website and on his personal site, where he continues to push the boundaries of gigapixel and 360-degree imaging.

By the end of this article, you’ll not only grasp the essence of resolution in imaging but also appreciate its profound implications in technology, privacy, and beyond.

And if you’re here reading this, then you might want to learn about our offer of a free data set including 15 TERAPIXELS of stunning imagery of our home city of Prague for research needs. Check it out here and write in if you are interested in obtaining the data set.

Mosaic REALMAP: Explore Prague in Unprecedented Detail with 1.26 Million Images

Now let’s get into it!

Types of Resolution in Imaging

There are various types of resolution, including temporal resolution, spectral resolution, angular resolution, color resolution and bit resolution, but for the purposes of this article we will focus on the following two:

Spatial Resolution refers to the amount of detail an imaging system can capture in a given area, often measured in pixels per unit distance (e.g., pixels per meter). Higher spatial resolution allows finer details to be observed, which is crucial in applications such as urban planning, geospatial mapping, and surveying, where understanding small-scale features like road cracks, street signs, or building structures is essential. In 360-degree imagery, higher spatial resolution ensures that the entire panoramic image holds detail uniformly across its entire surface, preventing loss of information in critical areas.
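To make "pixels per unit distance" a little more concrete, here is a minimal sketch that converts the width of a 360-degree panorama into pixels per degree and into the approximate real-world width covered by a single pixel at a given distance. The function names are hypothetical, and the calculation assumes an idealized panorama in which every pixel along the horizon carries real detail, which actual lenses and stitching only approximate.

```python
import math

def pixels_per_degree(pano_width_px: int) -> float:
    """Horizontal pixels per degree along the horizon of a 360-degree panorama."""
    return pano_width_px / 360.0

def pixel_footprint_m(pano_width_px: int, distance_m: float) -> float:
    """Approximate width (in metres) covered by one horizon pixel at a distance.

    Small-angle approximation: footprint = distance * angle subtended by one pixel.
    """
    angle_per_pixel_rad = 2.0 * math.pi / pano_width_px
    return distance_m * angle_per_pixel_rad

# 13504 is the equirectangular width quoted later in this article.
width = 13504
print(f"{pixels_per_degree(width):.1f} px per degree")
print(f"{pixel_footprint_m(width, 10.0) * 1000:.2f} mm per pixel at 10 m")
```

The same two functions can be used to compare any two panorama widths at the working distance that matters for your survey.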

Radiometric Resolution or Dynamic Range, on the other hand, refers to the sensitivity of an imaging system to different intensities of light, determining how finely an imaging sensor can distinguish differences in brightness levels. This is particularly important in geospatial and surveying applications when capturing surfaces with varying light intensities—such as shadowed versus sunlit areas—or when working in conditions where light changes frequently, such as overcast skies or dense urban environments. In 360-degree panoramic imagery, radiometric resolution ensures that the camera captures a wide dynamic range, which allows details in both dark and bright regions to be preserved without losing texture or clarity.
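One simple way to picture radiometric resolution is through bit depth: each extra bit doubles the number of brightness levels a pixel value can take. The sketch below only counts those levels. It is an illustration, not a description of any particular camera, and in practice sensor noise also limits how many of those levels are meaningful.

```python
def brightness_levels(bit_depth: int) -> int:
    """Number of distinct brightness values a pixel can store at a given bit depth."""
    if bit_depth < 1:
        raise ValueError("bit depth must be at least 1")
    return 2 ** bit_depth

# Common bit depths, chosen purely as examples.
for bits in (8, 10, 12):
    print(f"{bits}-bit: {brightness_levels(bits)} levels per channel")
```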

Examples of Spatial and Radiometric Resolution in 360-Degree Imagery:

Spatial Resolution in 360-Degree Imagery

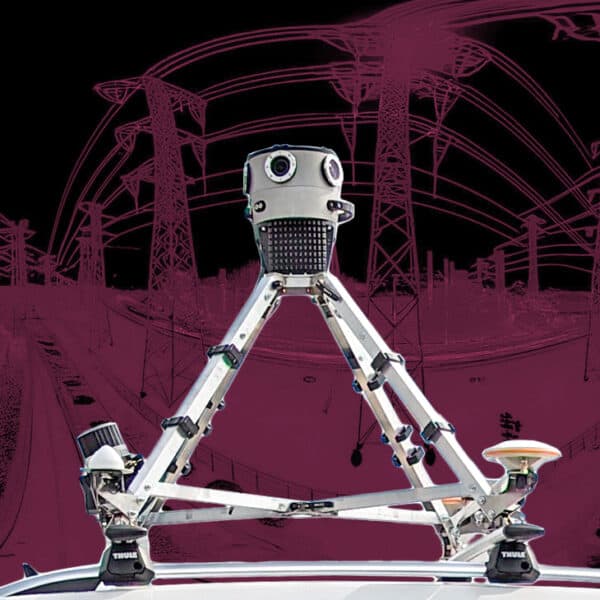

In urban mapping and infrastructure monitoring, cameras with high spatial resolution, like the Mosaic X camera system, can capture intricate details such as street markings, road conditions, and signage. When creating a 3D map of an area, every detail counts—higher spatial resolution ensures that even the smallest features are represented accurately in the final image. For instance, when capturing a panoramic view of a street, you need the spatial resolution to identify objects at varying distances from the camera with minimal distortion.

Radiometric Resolution (dynamic range) in 360-Degree Imagery

Radiometric resolution becomes important in environments where the lighting conditions vary significantly. For example, when capturing a panoramic street view in a city, some parts of the image may be in direct sunlight while others are in deep shadow, particularly around buildings. A camera with high radiometric resolution can better balance these contrasts, preserving details in both the bright and shadowed areas. This is essential in applications like infrastructure inspections, where detecting issues (such as cracks in shaded building walls) requires capturing all brightness levels accurately.

For example, two cameras may both claim to have 12K resolution, but the image quality can differ dramatically. Jeffrey Martin notes:

“You might have an image sensor that’s 200 megapixels, but the lens may not resolve all that detail. You end up with images that aren’t as sharp as those taken with a lower-megapixel camera but a better lens.”

For example: the Ladybug6 camera has 12,288 x 6,144 (72 MP native resolution)

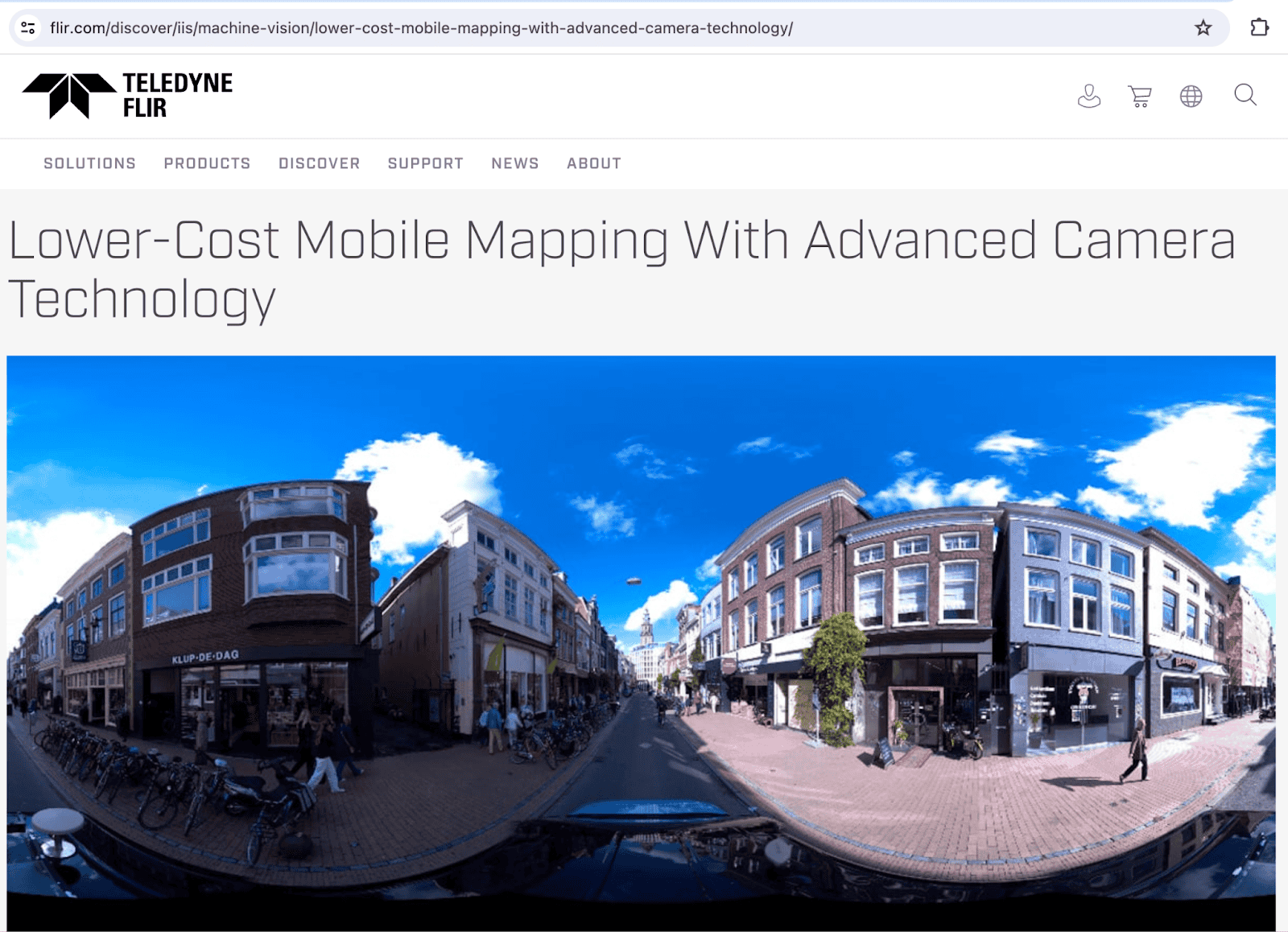

Panoramic image from the Ladybug6 camera (screenshot from Teledyne’s website for Ladybug6) showing poor color balance, bad dynamic range, dark shadows and blown out highlights. Image source

————–

The Mosaic X camera system similarly has:

12.32 MP x 6 = 74 MP native resolution

But the equirectangular resolution (2:1 panorama resolution) is:

13504 x 6752 = 91.2 MP

Yet the results are evident:

The Mosaic systems deliver sharper and more detailed imagery, essential for precise mapping and data analysis. Image source (Mosaic X camera data from London 2023). Contact us to get this data set.
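The Mosaic X figures quoted above can be checked with a few lines of arithmetic. This sketch simply multiplies the numbers given in the article; the helper name is hypothetical. Note that the equirectangular figure can come out higher than the native total because stitching resamples the sensor pixels onto a 2:1 grid, so a larger number here does not by itself mean more captured detail.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Pixel count of an image, expressed in megapixels."""
    return width_px * height_px / 1_000_000

# Figures taken from the text: six sensors of 12.32 MP each,
# and a 13504 x 6752 equirectangular panorama.
native_mp = 6 * 12.32
equirect_mp = megapixels(13504, 6752)

print(f"Native total:    {native_mp:.1f} MP")
print(f"Equirectangular: {equirect_mp:.1f} MP")
```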

To learn more about some of the cameras with similar resolution according to MP, but with varying image quality, see here →

Image Quality and Resolution

How Resolution Impacts Image Quality

The resolution of an image fundamentally dictates its quality by determining the amount of detail it can visually represent. High-resolution images can reveal subtleties and textures that are imperceptible in lower-resolution counterparts. For instance, in a high-resolution photograph of a forest, you might discern individual leaves and the roughness of the bark, whereas, in a lower-resolution image, the trees might blur into a green mass without distinction.

The Mosaic X camera system exemplifies how resolution, sensors, and lenses come together to deliver unparalleled image quality. Each camera is equipped with Sony 1-inch global shutter sensors and lenses carefully matched to ensure that every pixel is sharp, with minimal distortion. As Jeffrey Martin explains, “If there’s too much overlap between sensors, you waste pixels.” The result is a finely balanced panoramic image with 13.5K resolution that sets Mosaic apart from competitors.

By finding the perfect balance between sensor overlap and resolution, the Mosaic X delivers images with both sharpness and efficiency.

Have you always had burning questions about photogrammetry? We have an article for that, written by Jeffrey Martin himself:

Everything you wanted to know about photogrammetry but were afraid to ask

Factors Contributing to Perceived Image Clarity

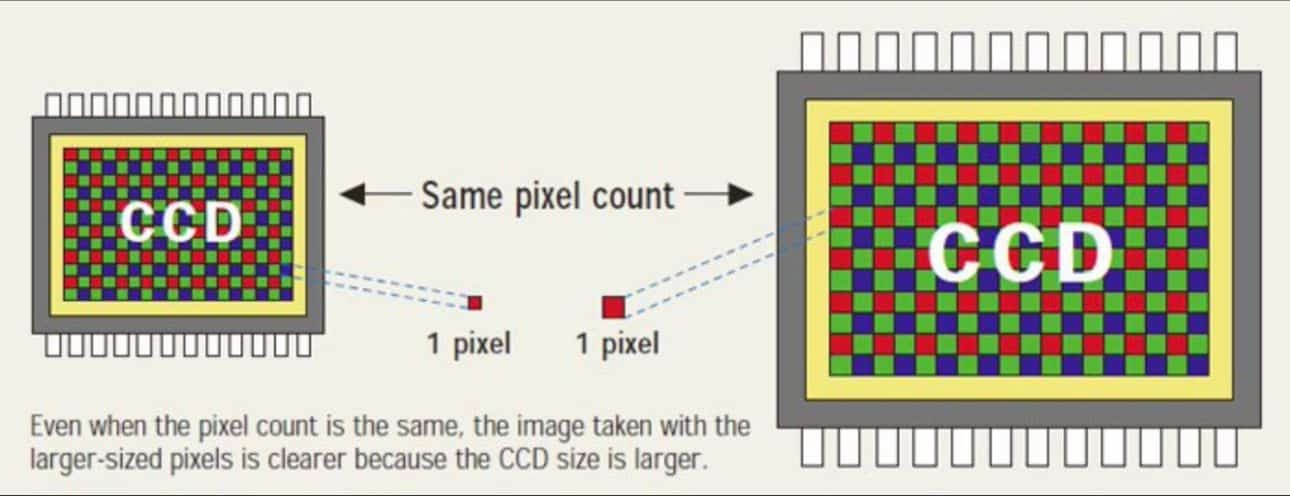

While resolution is a key factor in image quality, the overall clarity of an image is influenced by multiple components. The size and quality of the sensor, as well as the lens, play pivotal roles in how sharply details are captured. Larger sensors generally provide better low-light performance, as they can capture more light photons, reducing noise levels. However, as pixel sizes shrink to accommodate higher resolutions, challenges such as increased noise and heat can arise, particularly in low-light conditions.

As the demand for higher-resolution aerial imagery increased, manufacturers began reducing pixel sizes, which led to these challenges. Advances like backside-illuminated sensors, improved noise reduction algorithms, better lenses, and enhanced post-processing techniques have helped maintain image quality, despite the smaller pixels in modern cameras.

This shift reflects the balance between maintaining image quality and improving resolution efficiency in modern digital imaging. More details on how these advancements impact aerial photography can be found in this aerial photography and photogrammetry discussion.

Image Credit: LinkedIn post from Arkadiusz Szadkowski

As this article from Cambridge in Colour explains, sensor size and quality play a crucial role in determining image clarity, dynamic range, and low-light performance.

The Role of Pixel Density in Image Sharpness

Pixel density, often measured in pixels per inch (PPI), is integral to image sharpness, especially when viewing images on physical displays or prints. Higher pixel density means more pixels are packed into each inch, leading to a smoother, more cohesive image where individual pixels are less discernible. This is particularly important in media where the viewer is in close proximity to the image, such as smartphones and high-quality prints, where the human eye can distinguish finer details.
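Pixel density is easy to work out from a display's pixel dimensions and its diagonal size. The following is a small sketch of that calculation; the function name and the example monitor are assumptions made for illustration.

```python
import math

def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density of a display: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Hypothetical example: a 24-inch monitor showing 1920 x 1080 pixels.
print(f"{pixels_per_inch(1920, 1080, 24.0):.1f} PPI")
```

Running the same function for a phone-sized diagonal shows why small screens with similar pixel counts look so much smoother up close.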

It’s not just about having a high pixel density—those pixels need to deliver sharp, detailed images across the entire frame.

“With our Mosaic 360 camera systems, we’ve matched the lenses and sensors carefully to ensure that every pixel you get from the image sensor is sharp, with very little to no chromatic aberration,” Martin notes.

Beyond Resolution: Factors Influencing Image Clarity

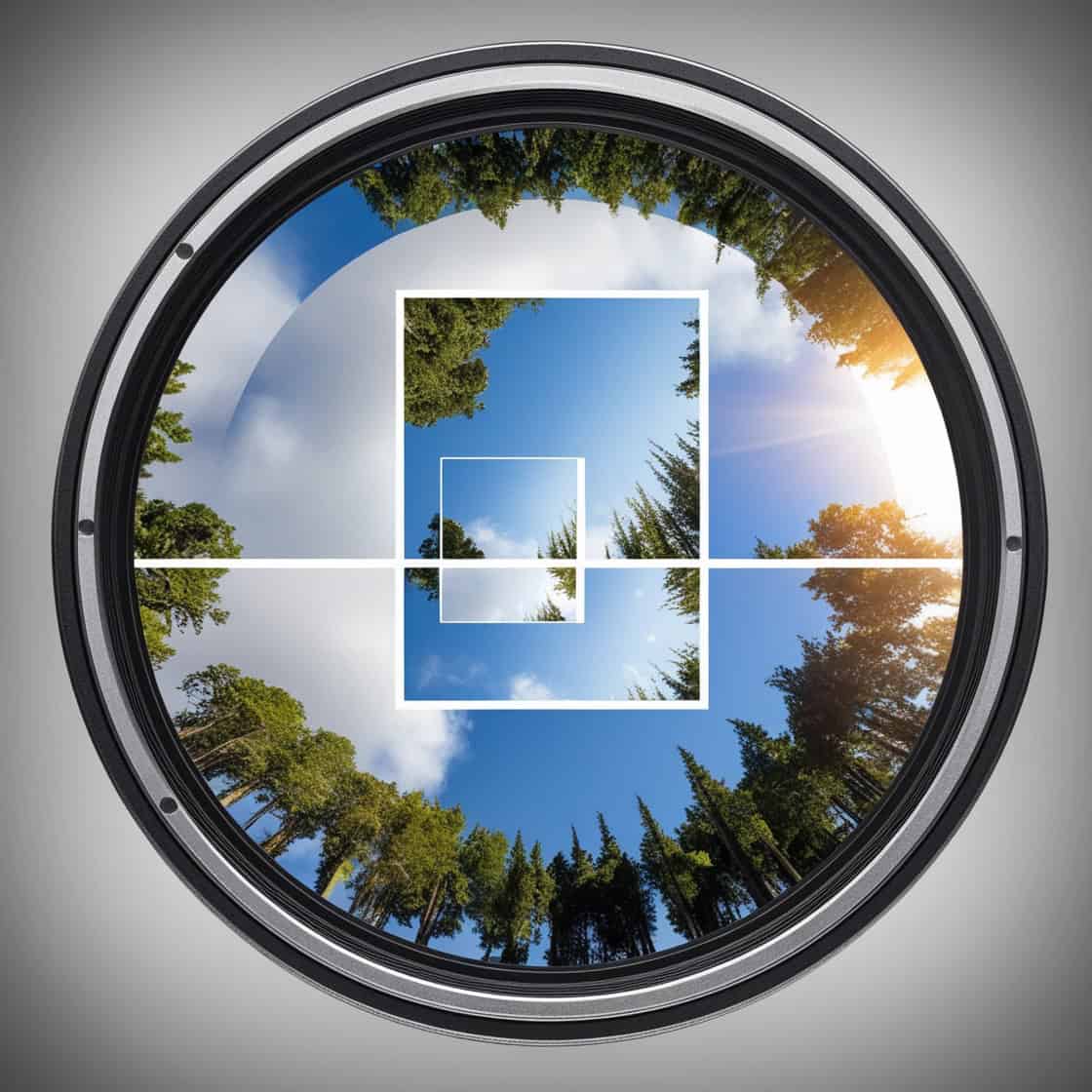

Mosaic made the bold choice to use fisheye (f-theta projection) lenses in their cameras, deviating from the industry norm of rectilinear lenses.

Jeffrey Martin’s experience with high-resolution imaging also extends to lens selection. While many imaging systems use traditional rectilinear lenses, Mosaic’s 360° cameras use fisheye (f-theta) lenses to ensure consistent resolution across the entire frame.

“We chose fisheye lenses because they offer better resolution consistency from the center of the image to the edges, which is crucial for stitching 360° panoramas,” Martin notes.

Traditional rectilinear lenses, while preserving straight lines, can cause stretching and image distortion at the edges. In contrast, fisheye lenses, despite their curved lines, maintain more consistent sharpness across the image, which is particularly important when stitching multiple images together for a seamless panorama.

This prevents one area of the image from appearing sharper than another, a common issue with traditional rectilinear lenses.
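The difference between the two lens types can be sketched with their idealized textbook projection formulas: a rectilinear lens places a ray arriving at angle θ from the optical axis at radius r = f·tan(θ), while an f-theta fisheye places it at r = f·θ. The code below compares how many sensor pixels each model spends per degree of scene as you move away from the center. These are idealized models, not Mosaic's calibration data, and real lenses deviate from them.

```python
import math

def rectilinear_density(theta_deg: float) -> float:
    """Radial pixels per degree relative to the image centre for r = f * tan(theta).

    The derivative dr/dtheta is f / cos^2(theta), so the ratio to the centre is 1 / cos^2(theta).
    """
    return 1.0 / math.cos(math.radians(theta_deg)) ** 2

def ftheta_density(theta_deg: float) -> float:
    """Radial pixels per degree relative to the image centre for r = f * theta.

    The derivative dr/dtheta is the constant f, so the ratio is always 1.
    """
    return 1.0

for angle in (0, 20, 40, 60):
    print(f"{angle:2d} deg off-axis: rectilinear x{rectilinear_density(angle):.2f}, "
          f"f-theta x{ftheta_density(angle):.2f}")
```

In the rectilinear model the edges receive several times more pixels per degree than the center, which is the stretching described above; the f-theta model spreads pixels evenly in the radial direction.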

The choice of lens, like Mosaic’s decision to use fisheye lenses, greatly affects image quality and consistency across the frame. This B&H article on lens distortion offers a deeper dive into the trade-offs between wide-angle lenses and image accuracy, especially for 360° photography.

Rolling Shutter vs. Global Shutter Sensors

The choice between global and rolling shutter sensors is crucial. The Mosaic X camera system employs global shutter sensors, which capture every pixel simultaneously, ensuring precise geometry. This makes them ideal for tasks requiring high precision, like 3D reconstruction. In contrast, the Mosaic 51’s rolling shutter sensors capture images line by line, offering better dynamic range and lower noise for less complex tasks like street-view documentation.

Learn more about the pluses and minuses of both sensors here →

“If you’re mainly capturing streets and infrastructure, the Mosaic 51, with its rolling shutter sensors, will perform exceptionally well,” Martin explains. “But for more complex tasks that require image precision and geometry, the global shutter sensors in the Mosaic X are a better fit.”
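To see why line-by-line readout affects geometry, consider an object moving sideways across the frame while a rolling shutter sensor is being read. The sketch below estimates how far the object shifts between the first and last row. All numbers are hypothetical and are not specifications of any Mosaic camera; a global shutter reads every row at the same instant, so the equivalent skew is zero.

```python
def rolling_shutter_skew_px(rows: int, line_time_s: float, speed_px_per_s: float) -> float:
    """Horizontal shift (in pixels) of a moving object between the first and last row.

    rows            -- number of sensor rows read out one after another
    line_time_s     -- time taken to read a single row
    speed_px_per_s  -- apparent horizontal speed of the object in the image
    """
    readout_time_s = (rows - 1) * line_time_s
    return speed_px_per_s * readout_time_s

# Hypothetical sensor: 3001 rows at 10 microseconds per row,
# with an object crossing the image at 1000 pixels per second.
skew = rolling_shutter_skew_px(rows=3001, line_time_s=10e-6, speed_px_per_s=1000.0)
print(f"Rolling shutter skew: {skew:.1f} px")
```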

Lens Quality and Diffraction Limits

The lens is the eye of the camera, gathering light and focusing it on the sensor. High-quality lenses with superior optical elements minimize aberrations and maximize sharpness across the image.

Every lens has diffraction limits that affect the maximum amount of detail it can resolve, especially at small apertures. The careful selection of lenses in Mosaic’s cameras ensures that diffraction is minimized, providing optimal sharpness throughout the image frame.

As Martin points out,

“We’ve matched the lenses and sensors to each other very carefully so that the lens offers the best possible resolving power for the sensor.”

This matching ensures that every pixel in the image is sharp, with little to no chromatic aberration. The result is an even distribution of resolution, from the center to the edges, which is especially important when capturing large-scale panoramas or urban areas where every detail counts.
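A common back-of-the-envelope check on lens and sensor matching compares the diffraction blur spot with the pixel size. For an ideal lens, the Airy disk diameter (out to its first dark ring) is about 2.44 × wavelength × f-number. The sketch below uses that standard formula; the pixel pitch and aperture are made-up example values, not Mosaic specifications.

```python
def airy_disk_diameter_um(wavelength_um: float, f_number: float) -> float:
    """Diameter of the Airy disk (to the first minimum) for an ideal lens, in micrometres."""
    return 2.44 * wavelength_um * f_number

# Hypothetical values: green light (0.55 um), and a 3.0 um pixel pitch.
pixel_pitch_um = 3.0
for f_number in (2.8, 5.6, 11.0):
    spot = airy_disk_diameter_um(0.55, f_number)
    print(f"f/{f_number}: blur spot {spot:.1f} um = {spot / pixel_pitch_um:.1f} pixels wide")
```

As the aperture gets smaller (a higher f-number), the blur spot covers more pixels, which is why adding megapixels stops helping past a certain point.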

Check out this Q&A with Jeffrey Martin and Lynn Puzzo from Mosaic as they break it down →

Sensor Size and the Role of Light Sensitivity

Larger sensors generally capture more light and have better light sensitivity, contributing to lower noise levels and higher image quality at a given resolution. This is because a larger sensor can have larger pixels, each of which gathers more light, or more pixels of the same size. The result is better dynamic range and clearer, more detailed photos.
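The link between pixel size and noise can be sketched with the standard photon shot noise model: if a pixel collects N photons, the random fluctuation is about √N, so the signal-to-noise ratio is also √N. The code below assumes light collection scales with pixel area and ignores every other noise source; the illumination figure is hypothetical.

```python
import math

def photons_collected(pixel_pitch_um: float, photons_per_um2: float) -> float:
    """Photons gathered by a square pixel, assuming collection scales with its area."""
    return pixel_pitch_um ** 2 * photons_per_um2

def shot_noise_snr(photons: float) -> float:
    """Signal-to-noise ratio when photon shot noise is the only noise source."""
    return math.sqrt(photons)

# Hypothetical illumination: 100 photons per square micrometre during the exposure.
for pitch in (1.0, 2.0, 4.0):
    n = photons_collected(pitch, 100.0)
    print(f"{pitch} um pixel: {n:.0f} photons, SNR about {shot_noise_snr(n):.0f}")
```

Under these assumptions, doubling the pixel pitch quadruples the light collected and doubles the signal-to-noise ratio.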

Image Processing and Compression Artifacts

The way an image is processed, whether in-camera or post-capture, can significantly impact its clarity. Noise reduction and sharpening algorithms can either enhance an image or degrade it if overused. Furthermore, compressing an image to reduce file size can introduce artifacts, such as blockiness or banding, which can obscure details and make the image appear less clear, regardless of its resolution.
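Banding is easy to reproduce in miniature. The sketch below takes a smooth brightness ramp and snaps it to only four levels, which collapses sixteen distinct values into four flat steps. This is a deliberately simplified stand-in: real codecs such as JPEG quantize frequency coefficients rather than pixel values directly, so treat it as an illustration of the effect, not of any specific compression format.

```python
def quantize(value: float, levels: int) -> float:
    """Snap a brightness in [0, 1] to the nearest of `levels` evenly spaced steps."""
    step = 1.0 / (levels - 1)
    return round(value / step) * step

# A smooth 16-step gradient from black (0.0) to white (1.0).
gradient = [i / 15 for i in range(16)]
banded = [quantize(v, levels=4) for v in gradient]

print("distinct values before:", len(set(gradient)))
print("distinct values after: ", len(set(banded)))
```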

The Significance of Dynamic Range

Dynamic range—the range from the darkest to the brightest elements that a camera can capture—greatly affects perceived clarity. A higher dynamic range allows for more details in the shadows and highlights, which can make an image appear more vivid and detailed. Cameras with limited dynamic range might lose details in these areas, resulting in images that can feel flat or washed out.
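Dynamic range is usually quoted in stops, where each stop is a doubling of light. Given the brightest signal a sensor can record and the darkest signal it can still separate from noise, the number of stops is the base-2 logarithm of their ratio. The sketch below shows that conversion, along with the equivalent figure in decibels; the sensor values are hypothetical.

```python
import math

def dynamic_range_stops(max_signal: float, noise_floor: float) -> float:
    """Dynamic range in photographic stops (doublings of light)."""
    return math.log2(max_signal / noise_floor)

def dynamic_range_db(max_signal: float, noise_floor: float) -> float:
    """The same ratio expressed in decibels, as often seen on sensor data sheets."""
    return 20.0 * math.log10(max_signal / noise_floor)

# Hypothetical sensor: saturates at 20000 electrons, noise floor of 5 electrons.
print(f"{dynamic_range_stops(20000, 5):.1f} stops")
print(f"{dynamic_range_db(20000, 5):.1f} dB")
```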

This Adorama article provides a comprehensive explanation of dynamic range and its importance in modern digital photography, particularly in high-contrast environments.

Input vs. Output Resolution in Imaging Systems

Definition of Input and Output Resolution

Input resolution is the amount of detail captured by the camera’s sensor, often determined by the sensor’s megapixel count. Output resolution is the resolution of the final image after processing, which may be different from the input resolution due to scaling, cropping, or other manipulations.

How Camera Arrays Affect Resolution

In systems with multiple cameras, such as 360° cameras, each sensor captures a portion of the scene. The input resolution from each camera contributes to the overall resolution of the final stitched image. However, the output resolution is often not a straightforward sum of the input resolutions due to overlaps and the need for blending in the stitching process.

Learn more about the technologies fueling Mosaic’s industry-leading camera systems:

The technologies behind Mosaic cameras – the new industry standard

Challenges in Image Stitching and Alignment

Stitching is the process of merging multiple images to create a seamless panorama, essential for applications like 360-degree photography. However, achieving a flawless stitch involves managing sensor overlap to avoid issues like visible seams or misalignments, which can result in ghosting or blurring. These imperfections detract from the clarity of the final image, making the precision of the stitching process crucial.

A key challenge is finding the right balance in sensor overlap. Too much overlap wastes pixels and reduces the final resolution, while too little can lead to visible seams in the stitched image. As Jeffrey Martin, Mosaic CEO, explains:

“Too much overlap wastes pixels, but too little overlap can result in visible seams in the final panorama. We’ve made the appropriate trade-off to have enough overlap for stitching without sacrificing resolution, resulting in a 13.5K panoramic image that exceeds many similar cameras on the market.”

By carefully calibrating sensor placement, Mosaic cameras consistently produce high-resolution, fully stitched panoramas with sharpness across the entire frame. This precision makes the images ideal for applications such as surveying, infrastructure documentation, and urban planning, where accuracy is paramount.
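The overlap trade-off described above can be put into rough numbers. The sketch below looks only at a single horizontal ring of identical cameras and asks how many degrees of overlap each seam gets and what share of the captured field of view is redundant. The camera count and field of view are hypothetical, and a real rig also has vertical coverage and lens effects that this ignores.

```python
def overlap_per_seam_deg(num_cameras: int, hfov_deg: float) -> float:
    """Degrees of overlap at each seam in a 360-degree ring of identical cameras.

    A negative result means the cameras leave a gap instead of overlapping.
    """
    return (num_cameras * hfov_deg - 360.0) / num_cameras

def redundant_fraction(num_cameras: int, hfov_deg: float) -> float:
    """Share of the total captured horizontal field of view that is seen twice."""
    total_deg = num_cameras * hfov_deg
    return (total_deg - 360.0) / total_deg

# Hypothetical ring: 6 cameras, each covering 75 degrees horizontally.
print(f"Overlap per seam: {overlap_per_seam_deg(6, 75.0):.1f} degrees")
print(f"Redundant share:  {redundant_fraction(6, 75.0):.0%}")
```

Widening each lens makes stitching easier but pushes the redundant share up, which is the "wasted pixels" side of the trade-off.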

For more on the technical process of image stitching and photogrammetry, refer to this Pix4D guide, which explains how images are processed and stitched to create 3D models and detailed panoramic views.

Native Resolution vs. Equirectangular Resolution

Explanation of Native Resolution

Native resolution is the actual, inherent pixel count of a camera sensor. It’s the fixed number of horizontal and vertical pixels that capture the image data, directly influencing the detail that can be discerned in the image taken by that camera. High native resolution sensors capture more detail, allowing for finer zoom and larger prints without loss of image quality.

Understanding Equirectangular Panoramas

Equirectangular projection is a method for mapping a spherical surface onto a two-dimensional plane, commonly used for 360° panoramas. It maps longitude linearly to the horizontal axis and latitude to the vertical axis, producing a 2:1 image that is increasingly stretched toward the top and bottom, and that can be wrapped back onto a sphere for immersive viewing experiences.
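As a minimal sketch of how an equirectangular image is laid out, the code below converts a viewing direction (longitude and latitude in degrees) into pixel coordinates, and shows how much each row is stretched horizontally compared with the horizon. It assumes one common convention, with longitude 0 in the center of the image and the top row pointing straight up; other tools may use a different origin.

```python
import math

def direction_to_pixel(lon_deg: float, lat_deg: float, width: int, height: int):
    """Map a direction to (x, y) in an equirectangular image.

    lon_deg runs from -180 (left edge) to 180 (right edge);
    lat_deg runs from 90 (top row) to -90 (bottom row).
    """
    x = (lon_deg + 180.0) / 360.0 * width
    y = (90.0 - lat_deg) / 180.0 * height
    return x, y

def horizontal_stretch(lat_deg: float) -> float:
    """How much wider a feature appears at this latitude than at the horizon."""
    return 1.0 / math.cos(math.radians(lat_deg))

# Panorama size taken from the Mosaic X figures earlier in this article.
print(direction_to_pixel(0.0, 0.0, 13504, 6752))
print(f"Stretch at 60 degrees latitude: x{horizontal_stretch(60.0):.1f}")
```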

The Process of Combining Multiple Resolutions

Combining the resolutions of multiple camera sensors to create a single equirectangular image involves aligning and stitching together various images, adjusting for differences in exposure, color, and angle. The resolution of the final panorama is often higher than that of individual images due to the cumulative pixel count, but the quality of the stitch can affect the uniformity of resolution across the panorama.

Resolution in the Context of Privacy and Machine Learning

High-Resolution Implications for Privacy

High-resolution images capture a great deal of detail, which can inadvertently include sensitive personal information. In contexts where privacy is a concern, such as street photography or surveillance, high resolution can pose a risk by making individuals or private property identifiable.

The Role of Resolution in Image Anonymization Techniques

Anonymization techniques often involve reducing the resolution of certain image areas to obscure identifying features while maintaining enough detail for the image to be useful. This balance is crucial in fields like medicine, where patient anonymity must be preserved without compromising diagnostic details.
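Reducing the resolution of one region is often done by pixelation: the region is divided into blocks and every pixel in a block is replaced by the block's average. The sketch below does this for a small grayscale image stored as a list of rows. It is a toy illustration with hypothetical names, not a vetted anonymization method, and it says nothing about how the region to hide is found in the first place.

```python
def pixelate_region(image, top, left, height, width, block):
    """Return a copy of a grayscale image with one rectangle pixelated.

    image is a list of rows of integer brightness values; the original is not modified.
    """
    out = [row[:] for row in image]
    for by in range(top, top + height, block):
        for bx in range(left, left + width, block):
            ys = range(by, min(by + block, top + height))
            xs = range(bx, min(bx + block, left + width))
            # Average brightness of this block, rounded down to an integer.
            mean = sum(image[y][x] for y in ys for x in xs) // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out

# Tiny 4 x 4 test image; only the top-left 2 x 2 corner is pixelated.
image = [
    [0, 10, 20, 30],
    [40, 50, 60, 70],
    [80, 90, 100, 110],
    [120, 130, 140, 150],
]
for row in pixelate_region(image, top=0, left=0, height=2, width=2, block=2):
    print(row)
```

Larger blocks hide more detail but also remove more of the information that made the image useful, which is the balance described above.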

How Machine Learning Algorithms Utilize Resolution for Image Analysis

Machine learning algorithms depend on high-resolution images for accurate analysis, as more data points (pixels) can improve the identification, segmentation, and classification of objects within images. However, higher resolutions require more computational power and can introduce privacy concerns.
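The computational side of this trade-off comes from simple geometry: pixel count grows with the square of linear resolution. The sketch below shows how much raw data a panorama represents and how quickly that shrinks when the image is downscaled. It assumes uncompressed 8-bit RGB and makes no claim about how a particular model's runtime scales, which depends on its architecture.

```python
def raw_size_mb(width_px: int, height_px: int, channels: int = 3, bytes_per_channel: int = 1) -> float:
    """Uncompressed size of an image in megabytes (10^6 bytes)."""
    return width_px * height_px * channels * bytes_per_channel / 1_000_000

def pixel_count_ratio(linear_scale: float) -> float:
    """Factor by which the pixel count changes when both dimensions are scaled."""
    return linear_scale ** 2

# Panorama size taken from the Mosaic X figures earlier in this article.
print(f"Full resolution: {raw_size_mb(13504, 6752):.1f} MB of raw RGB data")
print(f"Half resolution keeps {pixel_count_ratio(0.5):.0%} of the pixels")
```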

The Balance Between Resolution and Privacy in Different Applications

The trade-off between image resolution and privacy is a delicate balance. Applications that rely on facial recognition or license plate reading may need high-resolution images to function accurately, but this comes with heightened privacy risks. Conversely, public mapping services might reduce resolution to anonymize individuals, balancing utility with privacy.

Conclusion

As we continue to push the boundaries of imaging, it’s clear that true resolution is not just a number.

As Jeffrey Martin highlights,

“True resolution isn’t just about pixel count. It’s about how sensors, lenses, and post-processing come together to create sharp, reliable images for critical applications.

It’s about how all the components—sensors, lenses, and processing—work together to deliver sharp, accurate images that can be trusted for critical applications like surveying and 3D reconstruction.”

Mosaic’s commitment to matching these components ensures their cameras lead the industry in delivering high-quality imagery for professionals across a variety of fields.

To learn more about Martin and how he has built Mosaic, check out these two interviews with him over the years.

Meet Mosaic’s CEO Who’s Pushing Limits and Shaping 360 Camera Technology

Why are Mosaic 51 Camera Systems so Good?

Looking to learn more about Mosaic, our cameras and what high resolution and a clear image can do for you and your company?